The 0-1 Journey: Pioneering AI-First SaaS in a Hardware Legacy

As a Solo Product Designer, I led the 0-to-1 evolution of ChirpAI, ViewSonic’s first AI-native SaaS. This wasn’t just a design project; it was a digital transformation effort to bridge a hardware-centric legacy with the future of AI-driven classroom intelligence. I orchestrated the entire lifecycle—from decoding fragmented market policies to delivering a pilot-ready MVP.

Key Achievement: Achieved 100% pilot intent from US school administrators by solving a critical data-visibility problem.

The Challenge: Breaking the “One-Size-Fits-All” Barrier

A Silent Crisis in US K-12 Classrooms

In a typical US classroom of 30 students, teaching is often a "One-to-Many Broadcast." While the teacher delivers a lesson, the "silent majority" of students often falls through the cracks because their individual needs are invisible in real time.

Quantifying the Gap

- Teacher Burnout: 33% of educators are too overwhelmed by administrative tasks to provide 1-on-1 mentorship.

- Student Isolation: 19% of students navigate their entire learning journey in total silence, receiving zero personalized guidance.

- The Trust Barrier: 25% of students feel no meaningful connection with any adult at school, making them less likely to ask for help.

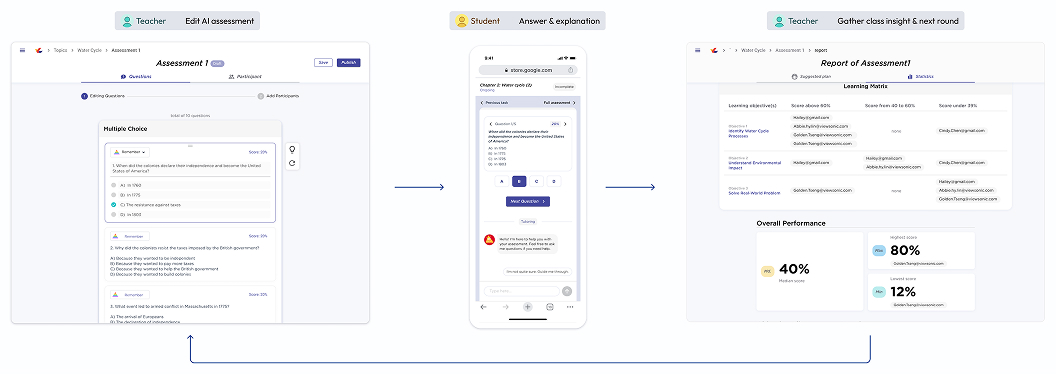

First Concept: The AI-Driven Formative Assessment

To validate our core hypothesis, we architected a “Check for Understanding” (CFU) framework. The goal was to digitize the feedback loop using an AI-mediated system that transforms raw engagement into targeted intervention.

| Phase | AI-mediated Logic | Educational Value |
|---|---|---|
| 1 Assessment Creation | Curriculum-Led Generation: Automated assessment creation based on selected teaching standards. | Removes the manual “prompt engineering” burden for teachers. |
| 2 Student Interaction | Guardrail-First Chatbot: A “Topic-and-Level-Constrained” interface for student engagement. | Ensures pedagogical safety and prevents hallucinations. |
| 3 Insight and Intervention | 3x3 Matrix Analysis: Automated clustering into 9 proficiency tiers for a Second-Round Intervention. | Scales Differentiated Learning without increasing teacher workload. |

Strategic Pivot: Failing Fast to Find PMF

Through early experimentation, we identified critical gaps in our initial direction. These iterations allowed us to refine our product-market fit before scaling.

| Iteration | Hypothesis | The Gap | Strategic Pivot |
|---|---|---|---|
| Trial 1 | Post-Class Assessment: A quiz tool to verify understanding after class. | High Friction: Students felt over-tested; teachers felt over-burdened. Low participation due to lack of instant feedback. | Shift from “Post-class Testing” to “In-class Interaction” to capture real-time engagement. |
| Trial 2 | Topic-Based Insights: A dashboard to cluster performance by topic. | Low Actionability: Required student-platform ecosystem support that was outside the product scope. | Shift from “Data Visualization” to “Actionable Pedagogy” using LLMs to group students for immediate intervention. |
| Trial 3 | 3x3 Granular Matrix: A 9-grid system (High/Mid/Low) to categorize students. | Signal vs. Noise: 1. AI Limits: Dialogue data is too unstructured for accurate 3-tier grading. | Optimized to 2 levels: Priority Topics x Binary Tiers (Emergent Understanding x Deep Comprehension) to ensure accurate, high-stakes intervention. |
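The Trial 3 pivot (Priority Topics x Binary Tiers) can be illustrated with a minimal sketch. This is not the production logic: the `TopicScore` type, the 0.0 to 1.0 score scale, and the `0.6` threshold are all assumptions made for illustration, and the score is assumed to come from an upstream model that is not shown here.

```python
from collections import defaultdict
from dataclasses import dataclass

# Tier names come from the Trial 3 pivot; everything else is hypothetical.
EMERGENT = "Emergent Understanding"
DEEP = "Deep Comprehension"


@dataclass(frozen=True)
class TopicScore:
    student: str
    topic: str
    score: float  # assumed 0.0-1.0 comprehension estimate (illustrative)


def group_by_topic_and_tier(scores, threshold=0.6):
    """Bucket students into (topic, binary tier) groups.

    The threshold is an assumed cut-off, not a validated value.
    """
    groups = defaultdict(list)
    for s in scores:
        tier = DEEP if s.score >= threshold else EMERGENT
        groups[(s.topic, tier)].append(s.student)
    return dict(groups)


scores = [
    TopicScore("Ana", "fractions", 0.82),
    TopicScore("Ben", "fractions", 0.41),
    TopicScore("Cal", "fractions", 0.35),
]
groups = group_by_topic_and_tier(scores)
for (topic, tier), students in groups.items():
    print(f"{topic} / {tier}: {students}")
```

Two tiers per topic keep each group large enough to act on, which is the trade-off the pivot made against the noisier 9-grid.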

From Internal Insights to Market Strategy: Shaping the MVP Direction

The initial pivots were more than internal trial and error. Before committing to final MVP development, we summarized our internal vision and the external market constraints to make sure we were solving the right problem with the right technology.

Constraints

- Scenario Fragmentation: Confusion between pre-class, in-class, and after-class user scenarios.

- Market Saturation: A crowded landscape of established AI "test" and "practice" tools.

- Data Scalability: Extracting stable checkpoints is hindered by fragmented regional curricula, requiring extensive timelines for data crawling and RAG architecture development.

Enablers

- Policy & Budget Shifts: Favorable changes in US government educational funding.

- Refining "Insights": The demand for data-driven insights remains strong, provided the content is more actionable and integrated.

Lean Discovery: Rapidly Grounding the Core Value Proposition

To navigate the ambiguity of a new market, I spearheaded a series of Lean Discovery cycles. Rather than pursuing formal academic rigor, we focused on rapidly triangulating high-impact pain points from fragmented market signals. This agile approach allowed us to move fast while ensuring the 0-1 trajectory was anchored in objective US policy and stakeholder needs.

| Studies | Methodology | Design Impact |
|---|---|---|
| Policy & Ecosystem Mapping | Desktop Research: Synthesized complex US frameworks (MTSS, ESSA, SEL) and intervention systems. | Mitigated Early-Stage Risks: Bypassed low-viability directions to establish a validated project foundation. |
| In-Class Activity Habits | Interviews & Routine Field Observations: Mapped activity workflows with teachers and US trainers to identify pain points. | Feature Integration: Standardized UI components and introduced “Exit Ticket” features aligned with curriculum standards. |
| B2B Stakeholder Mapping | Stakeholder Interviews: Analyzed AI perceptions and concerns within the “Class-School-District” hierarchy. | User vs. Buyer Strategy: Visualized ecosystem priorities to distinguish buyers from end-users and define core requirements. |
| Cross-Cultural Concept Testing | Comparative Concept Testing: Evaluated 3 prototype variations to probe instructional mental models and expectations. | Strategic Pivot: Identified a critical cultural gap, shifting the US product strategy from “Control” to “Student Empowerment.” |

From Concept to MVP

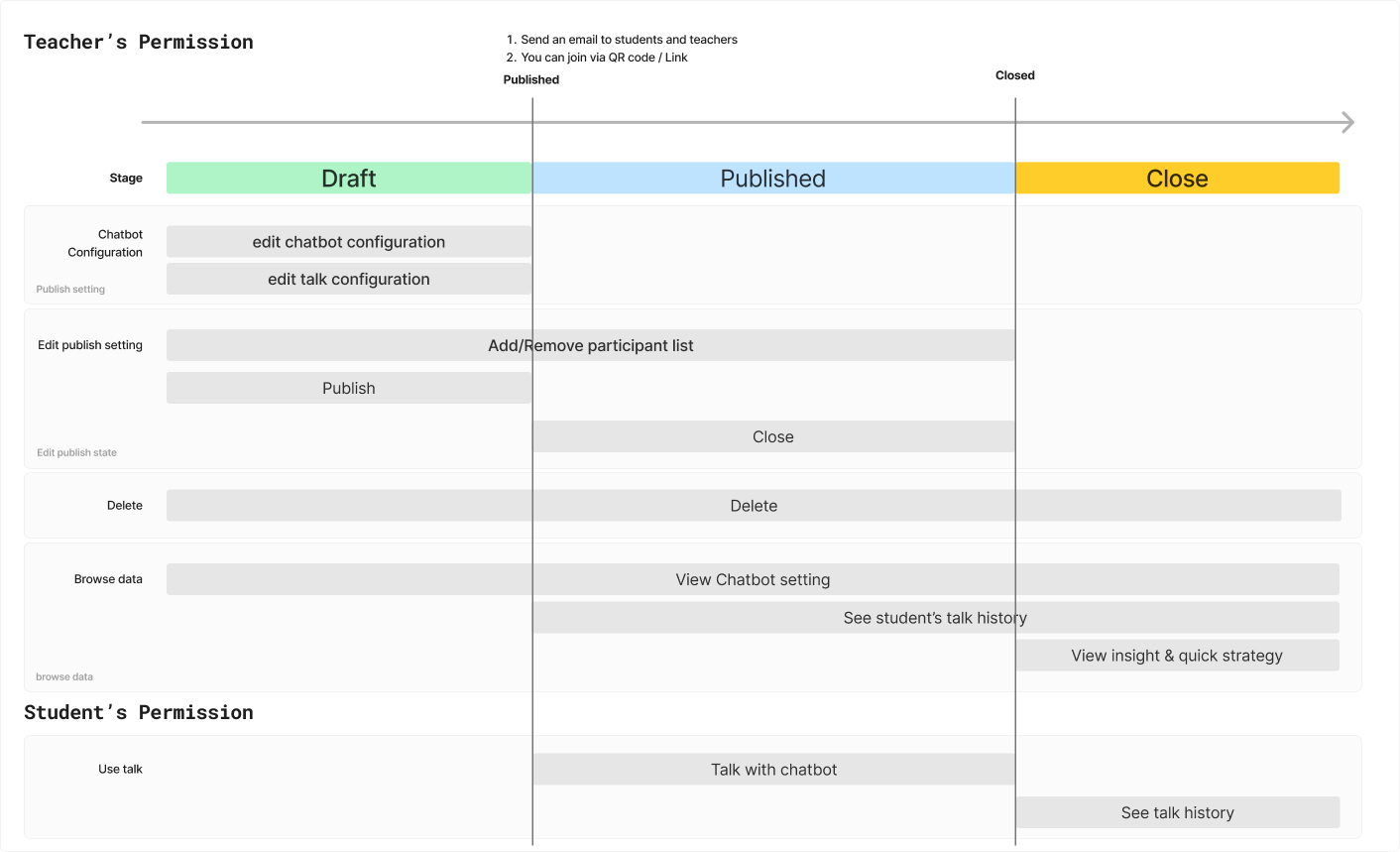

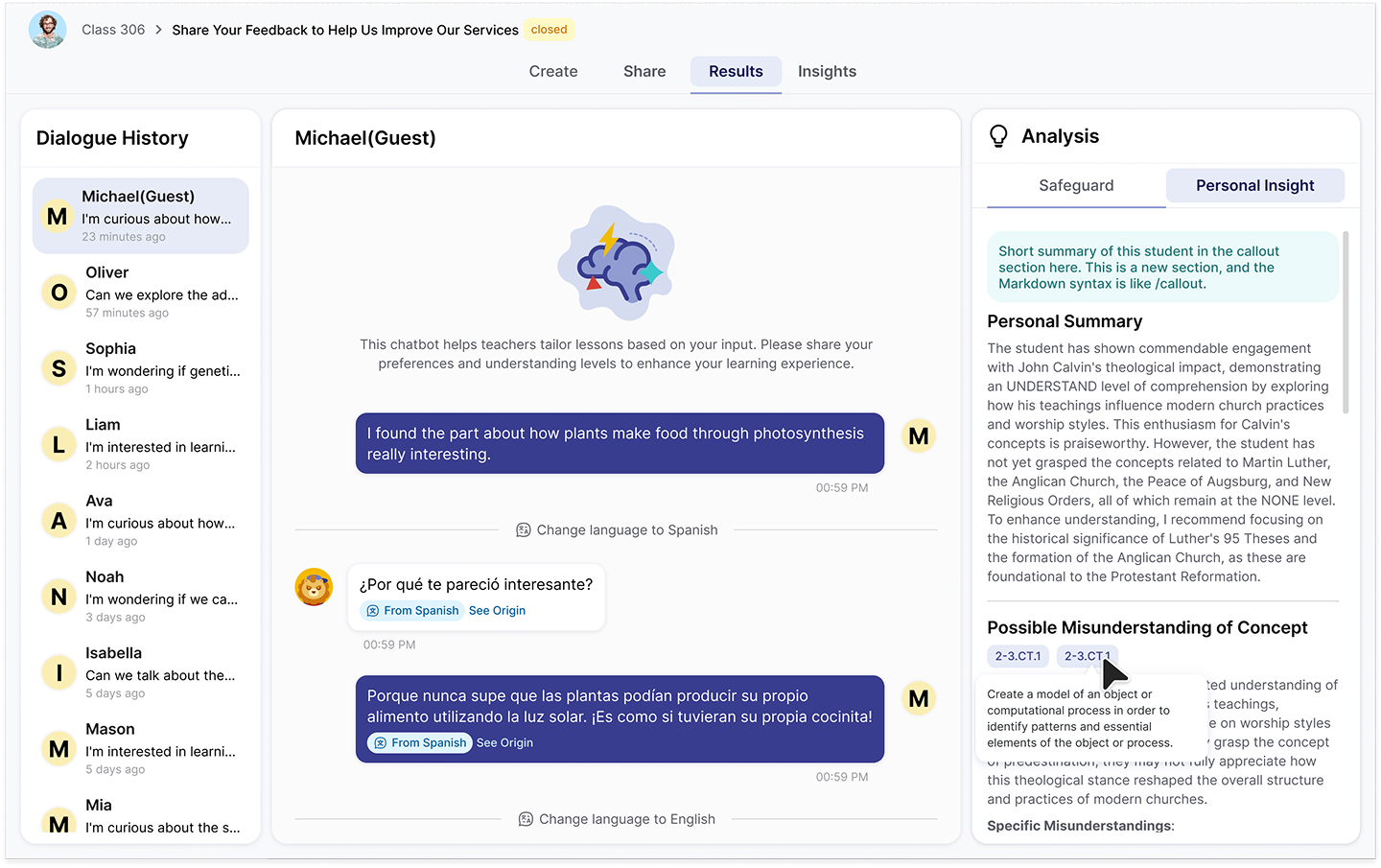

Information Architecture: The “Talk”-Centric Model

I constructed a "one-to-many" interaction model using "Talk" as the foundational unit. By referencing the state management of survey platforms, I designed a scalable flow to handle asynchronous interactions between teachers, students, and AI. This core logic unified the entire system, from sitemaps and permissions to the final UI layout.
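A minimal sketch of how a Talk's lifecycle could be modeled as a small state machine. The three states (`DRAFT`, `OPEN`, `CLOSED`) are an assumption borrowed from how survey tools commonly handle forms; the actual ChirpAI states, class names, and transitions are not documented here and may differ.

```python
from enum import Enum


class TalkState(Enum):
    # Hypothetical states, modeled on a typical survey lifecycle.
    DRAFT = "draft"
    OPEN = "open"
    CLOSED = "closed"


# Allowed transitions (assumed): a Talk only moves forward.
ALLOWED = {
    TalkState.DRAFT: {TalkState.OPEN},
    TalkState.OPEN: {TalkState.CLOSED},
    TalkState.CLOSED: set(),
}


class Talk:
    """One teacher-created session that many students respond to."""

    def __init__(self, title):
        self.title = title
        self.state = TalkState.DRAFT
        self.responses = []  # list of (student, text) tuples

    def transition(self, new_state):
        if new_state not in ALLOWED[self.state]:
            raise ValueError(f"Cannot move from {self.state.value} to {new_state.value}")
        self.state = new_state

    def add_response(self, student, text):
        # Students can only respond while the Talk is open.
        if self.state is not TalkState.OPEN:
            raise ValueError("Talk is not open for responses")
        self.responses.append((student, text))


talk = Talk("Exit ticket: fractions")
talk.transition(TalkState.OPEN)
talk.add_response("student-1", "I mixed up numerator and denominator.")
talk.transition(TalkState.CLOSED)
```

Keeping the state on the Talk itself is what lets permissions and UI layout key off a single source of truth, for example hiding the student input once the state is `CLOSED`.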

Design Highlights

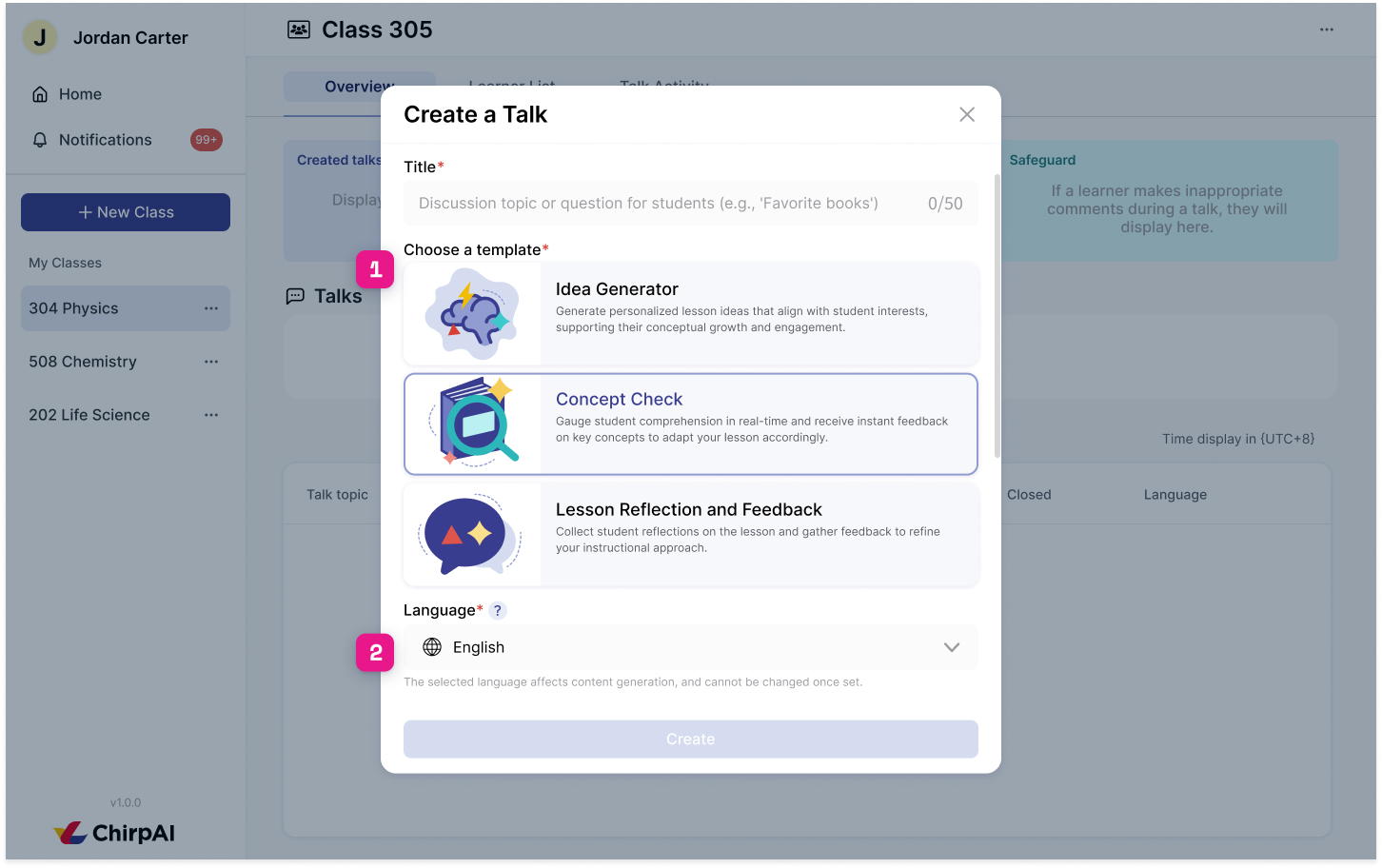

Create: Scenario-Driven Talk Creation

- Streamlined the AI setup process by offering context-specific templates based on core classroom scenarios. This “template-first” approach minimizes decision friction and ensures each session is powered by a tailored AI prompt structure, input logic, and insight goals.

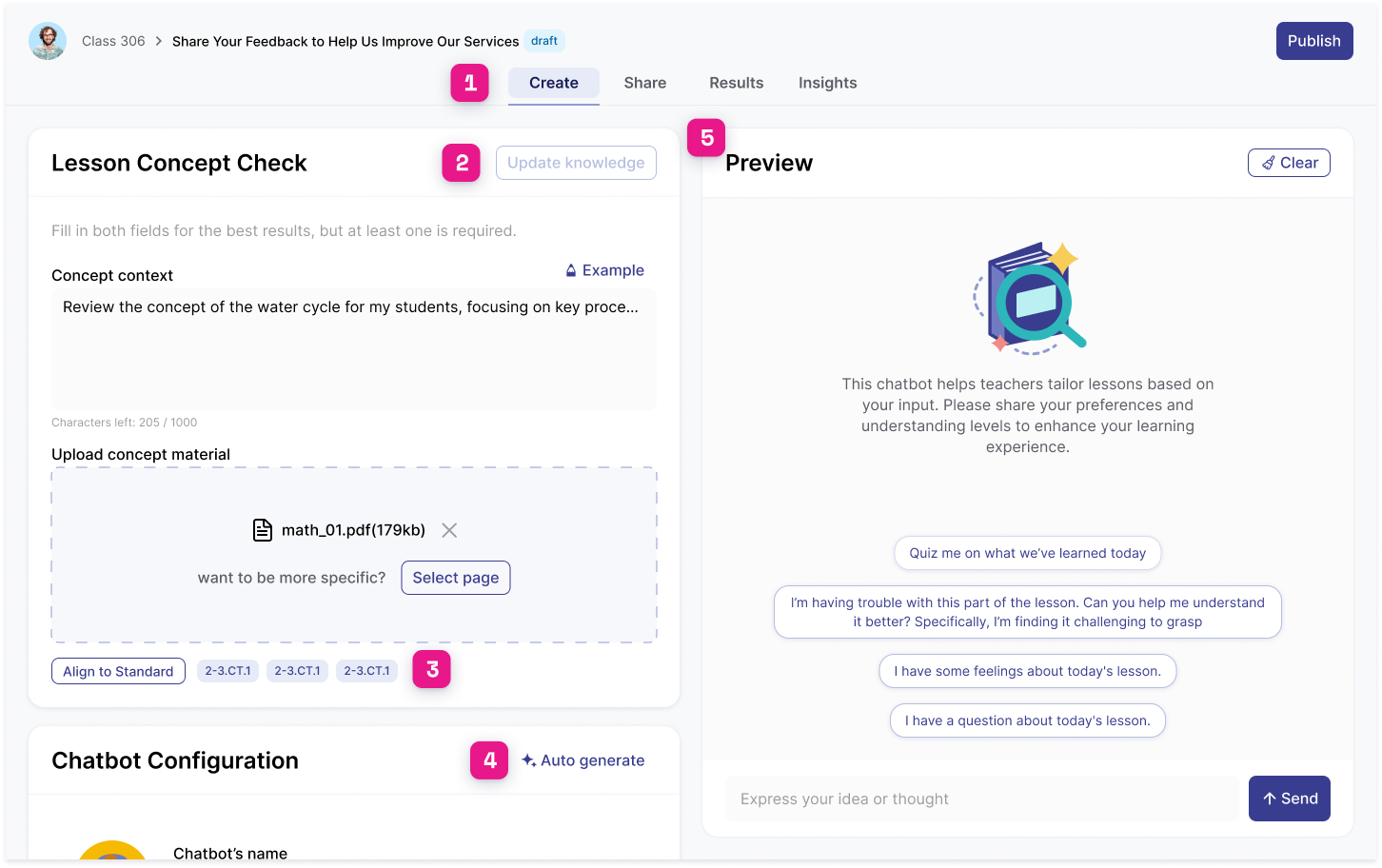

High-Efficiency Configuration Pipeline

- Asynchronous Updates: Teachers can upload materials and refine prompts without freezing the UI.

- AI-Assisted Metadata: The system automatically extracts educational standards and generates chatbot descriptions from uploaded content.

- Live Preview: Integrated a real-time testing panel for instant verification of the AI’s persona and logic.

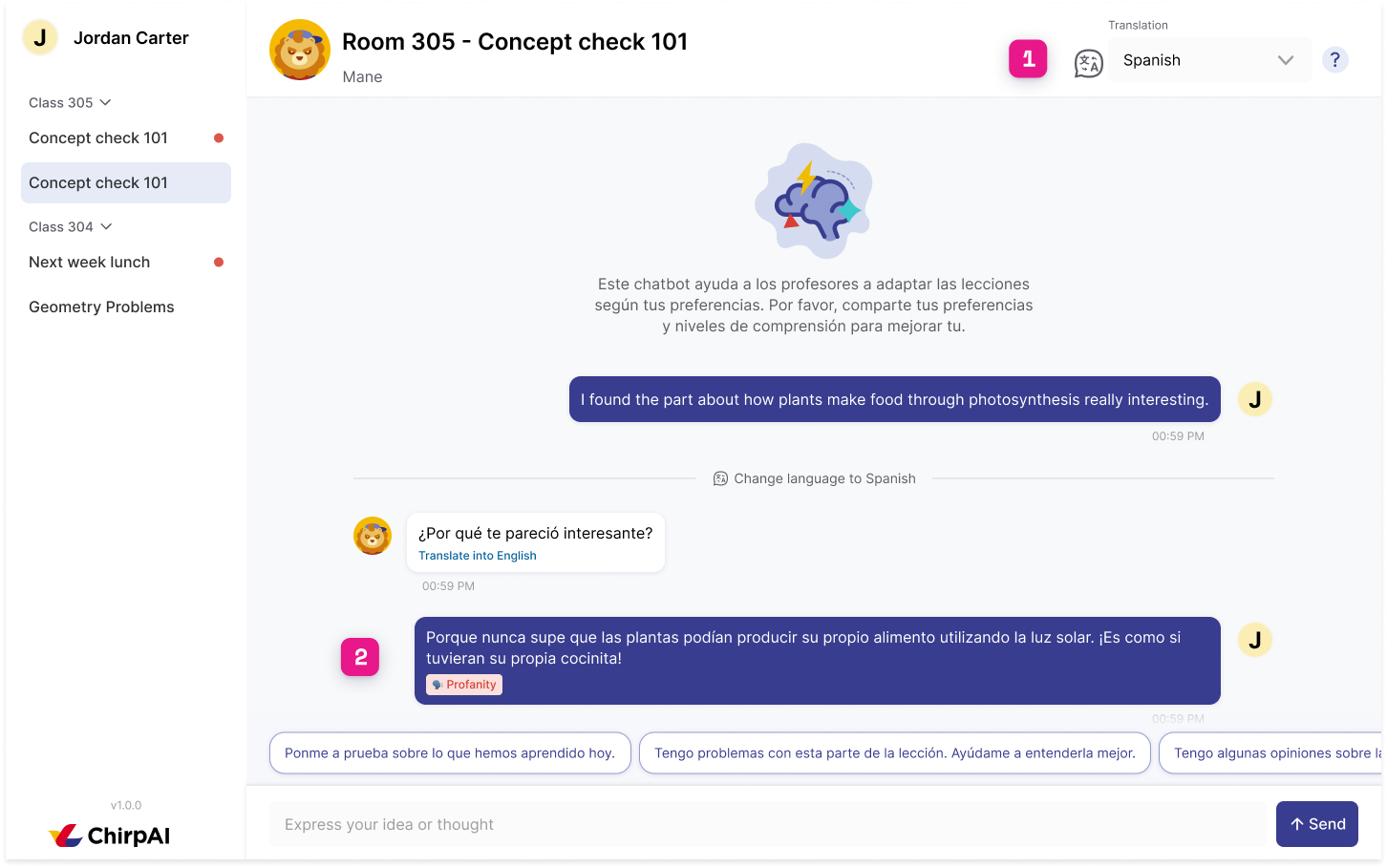

Inclusive & Safe Student Interaction

- Real-time Safeguards: Integrated an AI-driven monitoring system that flags sensitive topics with labels, triggering immediate notifications for teacher intervention.

- Bilingual Support: A “Swift Switch” language toggle allows students to interact in their preferred language while maintaining a unified data view for the teacher.
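The flag-and-notify flow behind the real-time safeguards can be sketched as follows. This is an illustration only: simple phrase matching stands in for the AI-driven classifier, and the labels, phrases, and the `notify` callback are all hypothetical.

```python
# Hypothetical label -> trigger phrases. A real system would use a
# trained classifier; phrase matching is a stand-in for illustration.
SENSITIVE_TERMS = {
    "bullying": ["bullied", "picking on me"],
    "distress": ["i give up", "nobody cares"],
}


def flag_message(text):
    """Return the sorted list of labels whose phrases appear in the text."""
    lowered = text.lower()
    return sorted(
        label
        for label, phrases in SENSITIVE_TERMS.items()
        if any(phrase in lowered for phrase in phrases)
    )


def monitor(messages, notify):
    """Check each (student, text) message and notify the teacher on a flag."""
    for student, text in messages:
        labels = flag_message(text)
        if labels:
            notify(student, labels)


alerts = []
monitor(
    [
        ("student-1", "Can you explain fractions again?"),
        ("student-2", "I got bullied at recess and nobody cares."),
    ],
    notify=lambda student, labels: alerts.append((student, labels)),
)
print(alerts)
```

Passing `notify` as a callback keeps detection separate from delivery, so the same check could feed a dashboard badge or a push notification.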

Real-time Talk Results: Teachers can capture student thinking without closing the session.

- The dashboard gives teachers the “pulse” of the classroom while the session is still running: a Live Activity Feed provides instant feedback, while AI automatically summarizes safeguard triggers and extracts key student quotes to highlight learning gaps.

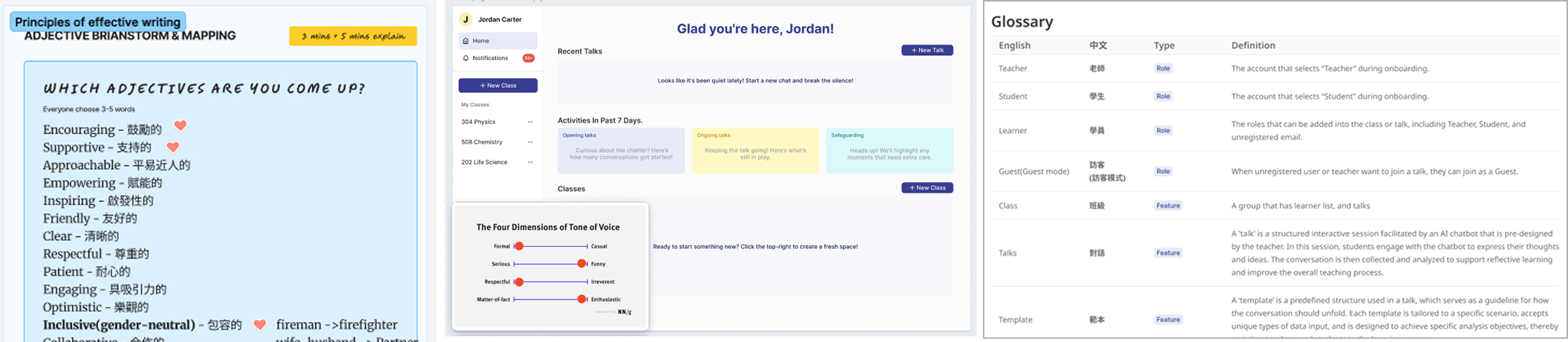

UX Writing Guide for Education

To ensure a consistent and expressive product voice, I established a UX writing framework based on NN/g guidelines and co-developed it with a US education Ph.D. This guide serves as the foundation for our AI’s personality and safety:

- Pedagogical Tone Strategy: Strategic use of encouraging vs. authoritative tones to guide students effectively.

- User-Specific Adaptation: Tailored vocabulary and tone nuances for distinct teacher and student interactions.

- Interaction Safeguards: Defined communication boundaries and “taboos” to ensure AI-user safety and professionalism.

- Linguistic Standards: A centralized system of Voice & Tone adjectives and a product-wide Glossary.

Product Market Fit Evaluation

Hypothesis

- Addressing Differentiated Learning Needs: “In the past, my teaching methods couldn’t effectively accommodate students who were falling behind. ChirpAI provides an excellent channel to bridge that gap.”

- Empowering Student-Centered Teaching to Boost Motivation: “The chatbot guides me on how to express my thoughts, which makes me feel truly understood.”

Recruiting and Study

- Participants were recruited through online seminars, on-site teacher training sessions, product demos, and the ViewSonic US educator community.

- From an initial pool of 49 participants, we collected 10 valid samples (3 online and 7 on-site), including both IT specialists and educators.

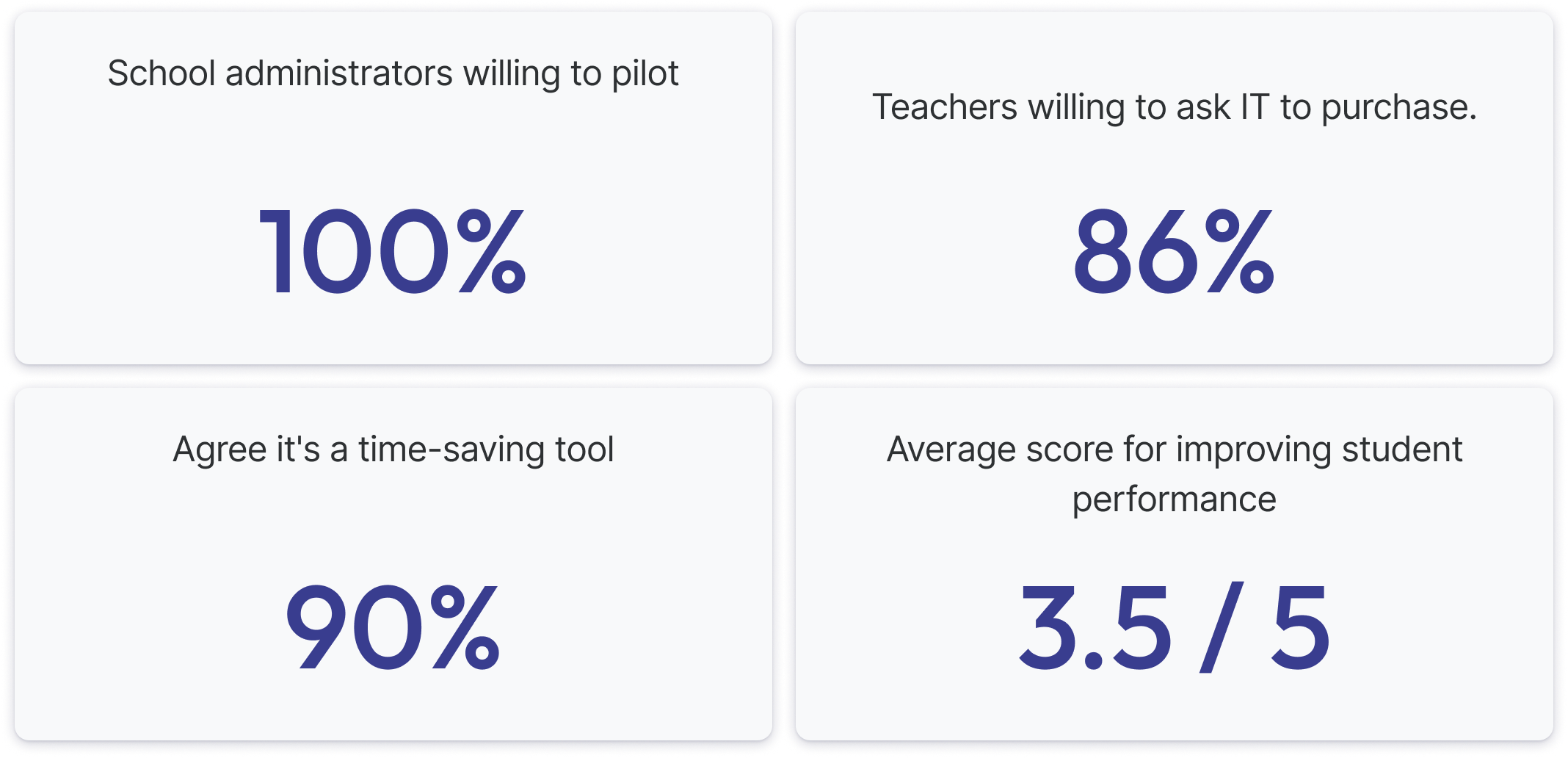

User Validation Results (3 Online + 7 On-site)

Through structured interviews and concept testing, we quantified the product’s impact across three key dimensions:

- 10/10 Pilot Intent: Secured trial commitments from all interviewed US school administrators.

- 6/7 Purchase Advocacy: High intent from trial educators to advocate for institutional procurement.

- 9/10 Efficiency Gain: Users reported a significant reduction in feedback-loop time and administrative overhead.

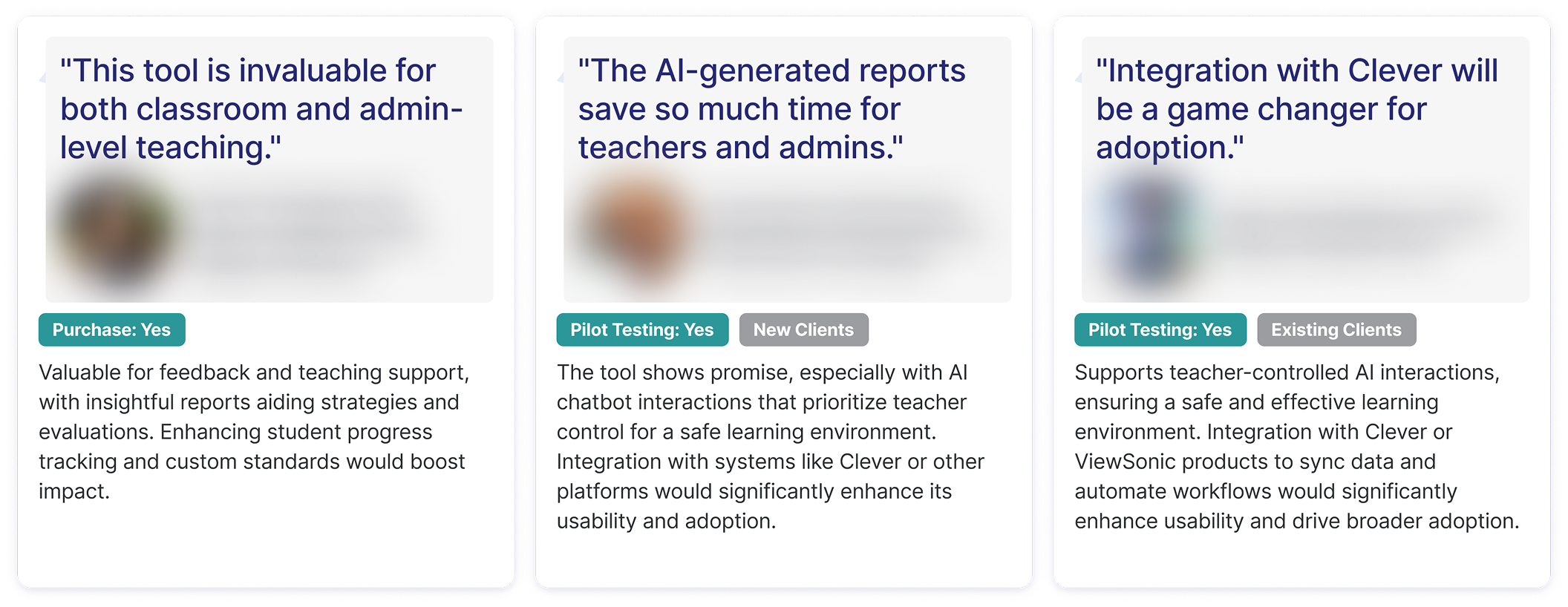

The Core Differentiator

The primary driver behind these results is ChirpAI’s distinction from ChatGPT:

Unlike a standard chatbot, this platform empowers teachers to manage and monitor AI-student interactions in real time, fostering a safe, pedagogically sound environment that motivates learning.

Interview Quotes

- Existing ViewSonic customers value the potential for student data integration and future cohesive solutions.

- Non-ViewSonic customers see ViewSonic’s AI innovation as a strong branding opportunity.

Market Traction: Taiwan Pilot

Conversion: 100% (2 out of 2 schools) expressed intent to purchase or collaborate.

Target Audience: International and bilingual schools.

Real-world Adoption: After initial cold outreach and demos, teachers immediately adopted the product, incorporating it into their lesson plans the very next week.

Reflections: My Framework for AI-mediated Interaction

Building ChirpAI taught me that AI success is measured by the quality of human augmentation. I’ve distilled my learning into four pillars:

- Intelligence: Transforming raw data into context-aware insights. True value is created when the user perceives “intelligence” through actionable classroom-bound suggestions.

- Modality: The choice of interaction (chatbot vs. file-input) is a strategic one. It must be dictated by domain-specific scenarios, not default patterns.

- Human-in-the-loop: It’s about the timing of AI intervention. The goal is to replace manual effort while preserving creative space for user customization.

- AI Ethics: Defining the limit of AI commitment. Trust is built by preventing over-reliance and keeping the AI strictly within pedagogical boundaries.

Final Acknowledgments

This 0-to-1 journey was a rare and rewarding challenge. I am grateful for the opportunity to have shaped the entire user experience from the research phase onward. Deep thanks to my PM, Abbie, for the late-night brainstorming and discussion.